AI Infrastructure

Overview

MBUZZ: Pioneering AI Infrastructure Excellence in the Middle East

MBUZZ stands at the forefront of the AI revolution, driving unprecedented advancements in datacenter infrastructure across the Middle East. As a vanguard in the region’s technological landscape, we are transforming the way organizations harness the power of artificial intelligence.

As a premier partner of NVIDIA, the global leader in AI computing, we are well placed to deliver deep expertise and current-generation solutions. This strategic alliance, combined with our partnerships with other industry leaders, enables us to offer a comprehensive suite of AI infrastructure services.

Data Centre Designing

NVIDIA DGX & HGX Deployment

Capabilities

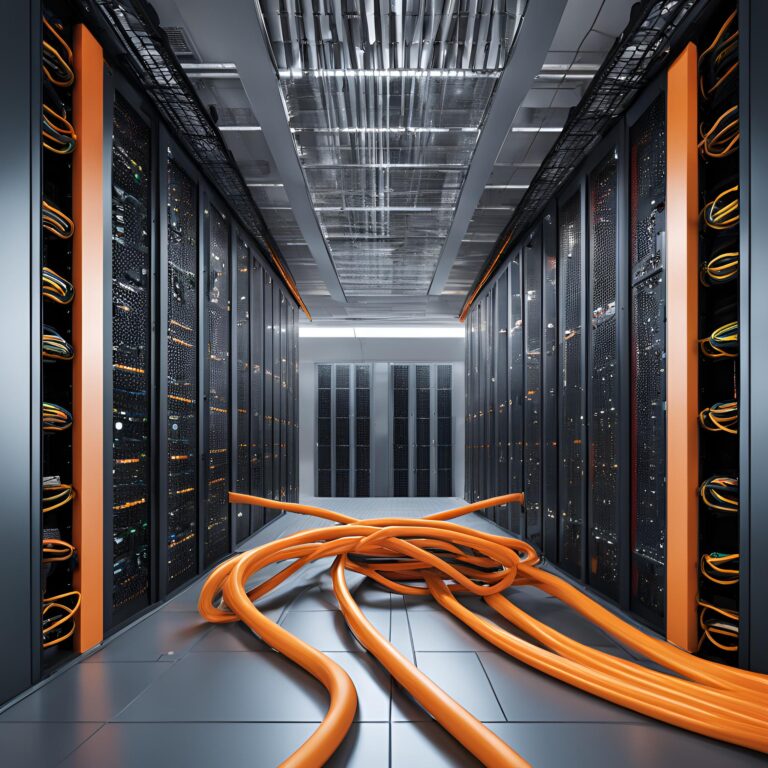

Exascale AI Infrastructure Design

MBUZZ excels in designing and implementing exascale AI computing environments. We architect solutions capable of handling massive datasets and complex AI models, leveraging technologies like NVIDIA’s DGX SuperPOD.

Our designs incorporate advanced cooling systems, including liquid and immersion cooling, to manage the high heat output of dense GPU clusters efficiently. We ensure these systems are optimized for AI workloads while maintaining energy efficiency and scalability.

High-Performance Networking

We specialize in deploying the ultra-low-latency, high-bandwidth networking that AI workloads depend on. Our expertise includes implementing NVIDIA Mellanox InfiniBand fabrics for fast, high-throughput communication between nodes.

We design network topologies that minimize congestion and optimize data movement between compute nodes, storage systems, and external interfaces.
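As a rough illustration of the sizing arithmetic behind a non-blocking topology, the sketch below computes the limits of a two-tier leaf-spine fabric built from identical switches. It assumes each leaf dedicates half its ports to hosts and half to uplinks, with one link from every leaf to every spine. The function name and the returned field names are hypothetical, and a real fabric design would also account for rail layout, storage ports, and oversubscription targets.

```python
def leaf_spine_capacity(ports_per_switch: int) -> dict:
    """Size a non-blocking two-tier leaf-spine fabric (illustrative only).

    Assumption: every switch has the same port count, each leaf splits its
    ports evenly between hosts and uplinks, and each leaf has exactly one
    link to each spine.
    """
    if ports_per_switch < 2 or ports_per_switch % 2:
        raise ValueError("expected an even port count of at least 2")
    half = ports_per_switch // 2
    return {
        "hosts_per_leaf": half,          # downlinks on each leaf
        "uplinks_per_leaf": half,        # one uplink per spine
        "spines": half,                  # needed to absorb every leaf's uplinks
        "max_leaves": ports_per_switch,  # each spine port serves one leaf
        "max_hosts": ports_per_switch * half,
    }


if __name__ == "__main__":
    print(leaf_spine_capacity(64))
```

With 64-port switches this gives 32 spines, up to 64 leaves, and up to 2,048 host ports at full bisection bandwidth.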

AI-Optimized Storage Solutions

MBUZZ designs and implements storage infrastructures tailored for AI workflows. We deploy high-performance parallel file systems and object storage solutions that can handle the immense I/O demands of AI training and inference.

Our storage designs incorporate technologies from Vast Data and distributed file systems to provide the throughput and IOPS necessary for large-scale AI operations. We also implement intelligent data tiering and caching strategies to optimize access to frequently used datasets.
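To make the tiering and caching idea concrete, here is a minimal sketch of a least-recently-used cache that sits in front of a slower capacity tier. It is not how any particular storage product works; the class name, the `fetch_from_capacity_tier` callback, and the hit and miss counters are all hypothetical names introduced for illustration.

```python
from collections import OrderedDict
from typing import Callable


class TieredDatasetCache:
    """Keep recently read items in a small fast tier (illustrative only)."""

    def __init__(self, fast_capacity: int,
                 fetch_from_capacity_tier: Callable[[str], bytes]):
        if fast_capacity < 1:
            raise ValueError("fast_capacity must be at least 1")
        self._fast = OrderedDict()  # key -> data, oldest entry first
        self._capacity = fast_capacity
        self._fetch = fetch_from_capacity_tier
        self.hits = 0
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key in self._fast:
            # Fast-tier hit: mark the item as most recently used.
            self._fast.move_to_end(key)
            self.hits += 1
            return self._fast[key]
        # Miss: read from the slower tier and promote into the fast tier.
        self.misses += 1
        value = self._fetch(key)
        self._fast[key] = value
        if len(self._fast) > self._capacity:
            # Evict the least recently used item.
            self._fast.popitem(last=False)
        return value


if __name__ == "__main__":
    cache = TieredDatasetCache(2, lambda key: key.encode())
    for shard in ["a", "b", "a", "c", "b"]:
        cache.read(shard)
    print(cache.hits, cache.misses)
```

The ratio of hits to misses is the figure to watch: a dataset that is reread every training epoch benefits from a fast tier large enough to hold its working set.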

AI Workload Orchestration and Management

We provide advanced solutions for managing and orchestrating complex AI workloads across large-scale infrastructure. Our capabilities include implementing Kubernetes environments optimized for GPU workloads, enabling efficient resource allocation and job scheduling. We also support multi-tenancy, allowing different teams or departments to share resources efficiently while maintaining security and isolation.

Our solutions incorporate advanced monitoring and analytics tools, providing real-time insights into GPU utilization, job performance, and system health, enabling proactive management and optimization of AI workloads.
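As a small example of GPU-aware scheduling, the sketch below builds a Kubernetes Pod manifest that requests GPUs through the `nvidia.com/gpu` extended resource, which is the resource name advertised by the NVIDIA device plugin. Placing each team in its own namespace is one common way to approach multi-tenancy and is an assumption here. The function name, container name, and the image and namespace used in the example are hypothetical.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int,
                     namespace: str = "default") -> dict:
    """Return a Pod manifest requesting whole GPUs (illustrative only)."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        # Assumption: one namespace per team provides tenant separation.
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # hypothetical container name
                    "image": image,
                    # GPUs are requested under limits as whole units.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest(
        "train-job", "example.invalid/trainer:latest", 8,
        namespace="team-a")
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, provided the cluster has the NVIDIA device plugin installed.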

Edge-to-Core AI Integration

MBUZZ offers comprehensive solutions that seamlessly integrate edge computing with core datacenter AI infrastructure. We design hybrid architectures that enable AI model training in the datacenter and efficient deployment at the edge.

Our solutions include implementing distributed learning frameworks, optimizing model compression for edge deployment, and creating secure data pipelines from edge devices to central AI systems. This capability is particularly crucial for applications in smart cities, industrial IoT, and autonomous systems across Saudi Arabia.

Our Approach

General Preparation

Meticulous planning and configuration of HGX systems, storage networks, and cabling plans ensure a smooth deployment process.

On-site Preparation

Site surveys, floor space optimization, cabinet location optimization, and PDU installation lay the groundwork for efficient deployment.

Physical Deployment

Installation of HGX systems, networking equipment, and management nodes, followed by meticulous cable deployment.

Logical Configuration

Applying system images, configuring switches, and validating connectivity ensure optimal performance.

Testing & Validation

Network validation, unit testing, and stress testing ensure the reliability and stability of the infrastructure.

Site Documentation

Comprehensive documentation provides detailed insights into hardware and logical configurations.

Cutting-Edge Solutions

NVIDIA DeepStream

The DeepStream SDK lets you focus on building optimized Vision AI applications without having to design complete solutions from scratch.

NVIDIA Metropolis

AI-powered platform for smart cities that uses computer vision and deep learning to enable real-time analytics of city infrastructure.

NVIDIA Triton

Inference server that simplifies deploying AI models at scale and optimizing them for real-time inference across various applications.
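For a sense of what calling an inference server looks like, the sketch below builds a request in the KServe v2 inference protocol, which Triton exposes over HTTP. It only constructs the URL and JSON body and does not contact a server. The function name, the model name `demo_model`, the tensor name `INPUT0`, the FP32 datatype, and the `localhost:8000` address are assumptions for illustration; a real model defines its own input names, shapes, and types.

```python
import json
from typing import Sequence, Tuple


def build_infer_request(model_name: str, input_name: str,
                        values: Sequence[float],
                        host: str = "localhost:8000") -> Tuple[str, str]:
    """Return (url, json_body) for one inference call (illustrative only)."""
    url = f"http://{host}/v2/models/{model_name}/infer"
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": [1, len(values)],  # assumption: a batch of one row
                "datatype": "FP32",         # assumption: float32 input
                "data": [float(v) for v in values],
            }
        ]
    }
    return url, json.dumps(body)


if __name__ == "__main__":
    url, payload = build_infer_request("demo_model", "INPUT0", [1, 2, 3])
    print(url)
    print(payload)
```

The returned body would be sent as an HTTP POST to the returned URL by any HTTP client.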

How can MBUZZ help with AI Infrastructure?

A foundation that works

We design and implement robust, scalable datacenter architectures tailored for AI workloads. Our solutions ensure your infrastructure can grow with your AI ambitions, providing the computational power and flexibility needed for today's and tomorrow's AI challenges.

DGX & HGX based solutions

As certified NVIDIA partners, we specialize in deploying and optimizing NVIDIA DGX and HGX systems for enterprise AI. Our team has extensive experience in designing, implementing, and managing these powerful GPU-accelerated computing platforms, ensuring you leverage their full potential for your AI initiatives.

Storage

We deploy high-performance, AI-optimized storage systems from industry leaders such as Vast Data and NetApp to handle the massive data requirements of modern AI workloads. Our storage solutions combine Vast Data's all-flash architecture for performance with NetApp's advanced data management capabilities, providing the fast data access that AI model training and inference depend on. Together, these technologies let us design storage infrastructures with the speed, scalability, and efficiency required by the most demanding AI applications, from real-time analytics to large-scale deep learning.

Partners